Posts

-

Tomorrow night is always bright, regardless of IDE.

27 May 2025

-

There are so many goodies in base R. Let's explore some functions you may not know.

17 January 2024

-

Interested in a custom feed of YouTube videos? GitHub Actions + flexdashboard = ❤️.

31 July 2023

-

Let’s fall together into a pit of success (when configuring macOS)!

22 December 2022

-

Working in Python but miss tidyverse syntax? These packages can help.

09 May 2022

-

Or, why `mtcars |> plot(hp, mpg)` doesn't work and what you can do about it.

18 January 2022

-

A cool paper used R and Python together --- and so can you!

28 September 2021

-

I am now a Sr. Product Marketing Manager at RStudio!

13 September 2021

-

Wikipedia has a lot of wonderful data stored in tables. Here's how to pull them into R.

27 July 2021

-

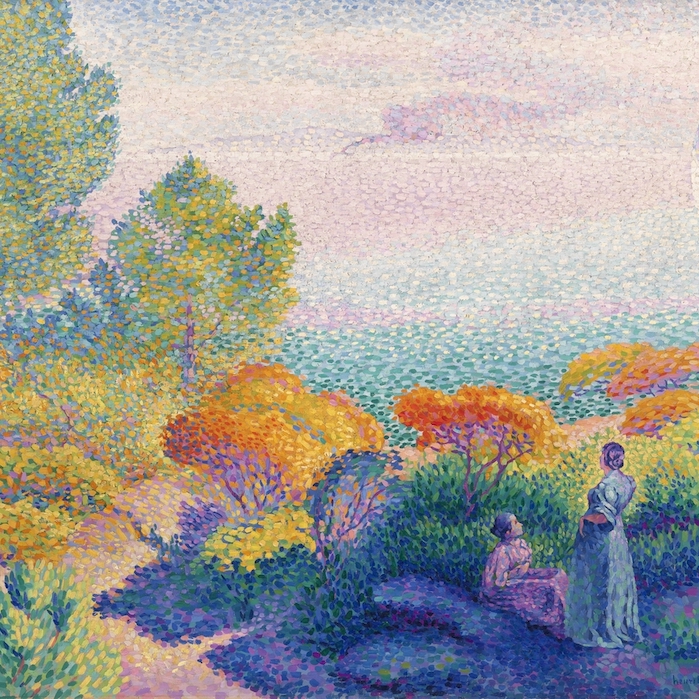

Generative art mixes randomness and order to create beautiful images. The \#rtistry hashtag helps find work from other Rtists.

09 May 2021

-

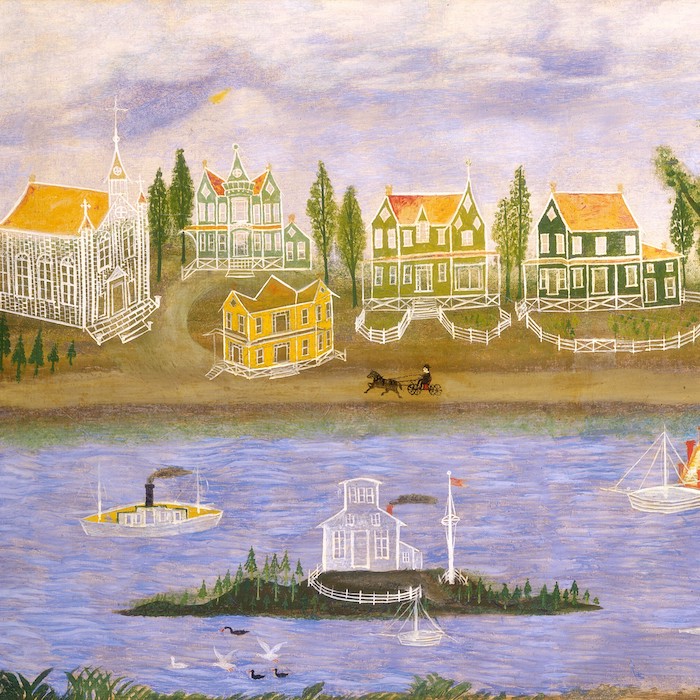

Let's explore some cool charts we can make in {ggplot2}.

28 March 2021

-

Lessons learned when we used {bookdown} to prepare a manuscript to submit for publishing.

08 March 2021

-

Setting up a Google Docs-like coding environment in VS Code.

03 February 2021

-

Tired of trying to search all your liked Tweets on your timeline? Pull them into R instead!

10 November 2020

-

Thanks to contributions from Daniel Anderson, {leaidr} is even easier to use.

30 August 2020

-

Celebrating the R community.

13 July 2020

-

Census data is valuable, but can be stored in messy Excel spreadsheets.

28 May 2020

-

The {leaidr} package helps us easily create maps of U.S. school districts.

03 May 2020

-

My frequently used reference for styling {ggplot2} charts.

27 January 2020

-

What's the most common type of district in the U.S.? Let's find out using R.

11 September 2019

-

Most of the time, data doesn't come in tidy spreadsheets. With R, though, you can pull data from PDFs to use in analyses.

16 December 2018

-

Exploring why disaggregation of data is important by looking at district demographics.

21 September 2018
